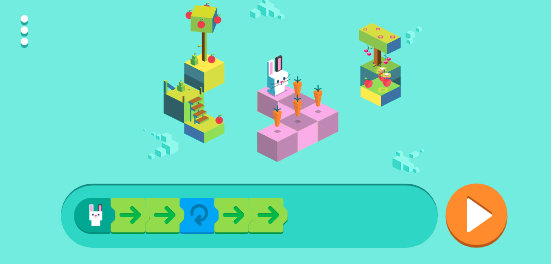

A Google Doodle Game published on Dec 4, 2017 in celebration of “kids coding” looks like this:

The object of this game is to arrange the instruction blocks (hop-forward, turn-left, etc.) in a sequence such that the bunny following the instructions can eat all the carrots.

Unsurprisingly, you only find out whether your program works after you press the play button. Why is that? Why is there no immediate feedback about what the bunny will do as you build the sequence? Is it not feasible? Of course it is! The real reason there is no feedback is that this is not how we think about programming.
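To make the feasibility claim concrete, here is a minimal sketch of what immediate feedback could look like for a game of this kind. It is not the Doodle's actual implementation: the grid coordinates, the `preview` function, and the `turn-right` instruction are assumptions made for illustration (only hop-forward and turn-left are named above). The idea is simply to compute the bunny's state after every instruction, so an editor could redraw the path each time a block is added or moved.

```python
# Headings listed clockwise on a grid where y grows downward (an assumed layout).
HEADINGS = [(1, 0), (0, 1), (-1, 0), (0, -1)]  # east, south, west, north


def preview(instructions, start=(0, 0), heading=0, carrots=frozenset()):
    """Return the bunny's state after each instruction in the sequence."""
    x, y = start
    remaining = set(carrots)
    states = []
    for op in instructions:
        if op == "hop-forward":
            dx, dy = HEADINGS[heading]
            x, y = x + dx, y + dy
            remaining.discard((x, y))  # eat a carrot if one is on this square
        elif op == "turn-left":
            heading = (heading - 1) % 4
        elif op == "turn-right":  # hypothetical block, not named in the text
            heading = (heading + 1) % 4
        else:
            raise ValueError(f"unknown instruction: {op}")
        states.append(
            {"pos": (x, y), "heading": heading, "carrots_left": len(remaining)}
        )
    return states


# Re-run the preview on every edit; each prefix of the program has a visible effect.
program = ["hop-forward", "turn-left", "hop-forward"]
for step, state in zip(program, preview(program, carrots={(1, 0), (1, -1)})):
    print(step, state)
```

Because the whole simulation is a cheap pure function of the instruction list, there is no technical obstacle to calling it after every change instead of only when the play button is pressed.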

The “usual business” of programming

Correctly predicting what the computer will do, given some source code, is considered the usual business of programming. This involves thinking about things like:

- how a function, line by line, will transform specific values that pass through

- what a generic class will become, when fused with a concrete data type

- how specific modifications will change some runtime behavior

Let's look at it a little deeper.

In other words, we simulate the computer in our head, while sitting in front of a computer—a powerful simulation machine.
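As a small illustration of handing the first kind of mental simulation back to the machine, the sketch below records how a function's local values change line by line. It relies on Python's standard `sys.settrace` hook; the helper `trace_locals` and the example function `total_price` are hypothetical names invented for this sketch, and a real live-programming environment would need far more than this.

```python
import sys


def trace_locals(func, *args):
    """Run func(*args) and snapshot its local variables at each line."""
    snapshots = []
    code = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # ignore frames belonging to other functions
        if event in ("line", "return"):
            # Line offset relative to the 'def' line, plus a copy of the locals.
            offset = frame.f_lineno - code.co_firstlineno
            snapshots.append((offset, dict(frame.f_locals)))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active
    return result, snapshots


def total_price(quantity, unit_price):
    subtotal = quantity * unit_price
    tax = subtotal // 10
    return subtotal + tax


result, snapshots = trace_locals(total_price, 3, 20)
for offset, local_values in snapshots:
    print(offset, local_values)
print("result:", result)
```

Each snapshot shows the values that exist just before the corresponding line runs, which is exactly the bookkeeping a programmer otherwise does in their head.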

The irony is deep here, but I completely missed it for the majority of my programming lifetime. The joy of programming was largely the joy of puzzle solving—the correct solution being the accurate prediction of the behavior of some code. This fun kept me from seeing the real purpose of programming—it’s not making and solving puzzles, however elegant or clever—it’s something else.

The idea that a program is a description to be mentally simulated is deeply entrenched—but is it a good idea?

Batch oriented or interactive?

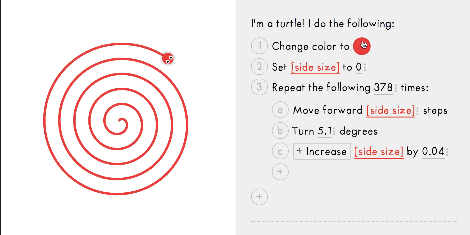

Contrast the “batch oriented” programming workflow used by the Google game above with the interactive experience in Joy JS.

Here, there is immediate visual feedback as you modify your program. If you've ever used the “developer tools” tab in a web browser to modify some code and see the effect live, you're doing this live interactive programming as well. Taking this idea further, we can manipulate the effect directly, and have the program update at the appropriate places.

The interesting question is how far can we take this idea? Will this just work for little toy programs? Is it only applicable where the final effect is a drawing?

I submit that any system behavior you can simulate in your head can also be simulated, more precisely, by the computer itself.

So I think live programming is possible not just in small, simple programs but also in large, multi-process systems. I believe what’s holding us back is the choice of core constructs in the design of programming languages and systems. The word “systems” is important here because you want the effect simulation to span all parts of the system, across all interconnected “processes”, not just within one “program”. Our systems and languages are not designed to be interactively evaluated and explored. We're very far from designing or thinking about systems that can interactively show us the effects of any program modification.

Consider your typical “programming language” that provides this experience:

1. Write a large piece of text (the “program”) while mentally simulating the code

2. Submit it to the computer

3. Evaluate the effect

This is rooted in batch-style programming. There are tools and techniques to incrementally improve this experience, for instance by automating the generation of the source text, but is the fundamental model here even a good one? Of particular interest is the complexity added in step 3 above, when you consider the combined effect in a system containing two or more OS processes with distinct programming languages and toolchains.

Interestingly, Smalltalk environments from the 70s explored a more interactive and live model than what I describe above, but the batch model is still predominant today, in spite of the increase in computing power.

How much of our mental simulation could be offloaded to the computer, if we choose suitable abstractions to represent systems and programs? Shouldn’t computers simulate and visualize, to a very large degree, the interesting effects and behaviors of our programs, as we create them?

Related

- Nicky Case’s Joy JS is a fun, interactive programming tool for short drawing programs.

- Jonathan Edwards's Subtext programming language is a series of research projects trying to radically simplify application programming. The starting point and manifesto, Subtext 1, introduces a programming view where values are visible and called functions are expanded inline as part of "overt semantics". An excerpt from the paper:

Overt semantics dispels the mystery of debugging. There is no need to guess at what happened inside the black box of run-time: debugging becomes merely browsing the erroneous execution, which is a copy of the program.

- Learnable Programming by Bret Victor discusses how various program aspects such as “time” and “flow” could be made tangible and visible. Bret has talked and written quite a bit on the theme of live and tangible programming. One of the more famous talks is Inventing on Principle, described here.

An excerpt:

you have to imagine an array in your head and you essentially have to play computer... the people we consider software engineers are just those people we consider really good at "playing computer"

- Eve was a multi-year project (discontinued as of January 2018) that explored the space of making programming accessible to all humans and providing a live, tangible experience.

- Lamdu aims to “create a next-generation live programming environment that radically improves the programming experience”.

- Observable is a new (as of January 2018) notebook-style, web-based reactive programming environment. All the visualizations and formulas added to the notebook are updated live as you update any cell values.

- Usable Live Programming is a paper by Sean McDirmid that discusses some specific ideas—probes and traces—that could usefully integrate live programming within a typical code editing experience. An interesting paper by Sean McDirmid and Jonathan Edwards is Programming with Managed Time. I think managed time is one core aspect of live programming and I hope to cover that topic in the future. For some more ideas see Live Programming as Gradual Abstraction.

Summary

The following is an imagined experience that I believe is technically feasible, and should be the usual way of working, but sadly isn’t even close to what is available today.

Whenever I make some change to a program, I’m really trying to affect some behavior in the system.

For a follow-up with a more concrete position, see Offload Mental Simulation.